Introduction

Redis has become essential infrastructure for modern applications requiring fast data access. Docker containerization simplifies Redis deployment while introducing considerations specific to ephemeral, networked environments. This guide covers complete Redis container deployment from basic single-instance configurations through production-ready clusters.

Containerized Redis serves diverse roles including session stores, caching layers, message brokers, and real-time analytics backends. Each use case demands specific configuration choices regarding persistence, memory management, and networking. Understanding these tradeoffs enables appropriate deployments matching application requirements.

Docker’s isolation benefits accelerate deployment and simplify configuration management while introducing network latency and storage considerations. We examine best practices that leverage container advantages while mitigating inherent tradeoffs.

Basic Redis Container Deployment

Single-instance Redis containers provide straightforward entry points for development and production deployments. Understanding fundamental deployment patterns establishes foundations for advanced configurations.

Running Your First Redis Container

The official Redis image provides minimal configuration suitable for initial deployments:

# Run basic Redis container

docker run -d \

--name redis-server \

-p 6379:6379 \

redis:7-alpine

# Verify container is running

docker ps | grep redis

# Test connection

docker exec redis-server redis-cli ping

# Access Redis CLI interactively

docker exec -it redis-server redis-cliThe Alpine-based image minimizes size while providing full Redis functionality. Default configuration suits many workloads without modification. The container exposes port 6379 for client connections.

Persistent Storage Configuration

Redis persistence requires careful configuration in containerized environments. Append-only file (AOF) persistence provides better durability than snapshot (RDB) persistence:

# Run Redis with AOF persistence

docker run -d \

--name redis-persistent \

-p 6379:6379 \

-v redis-data:/data \

redis:7-alpine \

redis-server --appendonly yes \

--appendfsync everysec \

--auto-aof-rewrite-percentage 100 \

--auto-aof-rewrite-min-size 64mbVolume mounts preserve data across container restarts. Docker manages volume lifecycle separately from containers, ensuring persistence when containers stop or restart. Verify volume mounting with docker inspect.

# Verify volume mount

docker inspect redis-persistent | jq '.[0].Mounts'

# Check AOF file exists

docker exec redis-persistent ls -la /dataMultiple persistence strategies serve different recovery requirements:

# RDB snapshot every 15 minutes if at least 1 key changed

docker run -d \

--name redis-rdb \

-p 6379:6379 \

-v redis-data:/data \

redis:7-alpine \

redis-server \

--save 900 1 \

--save 300 10 \

--save 60 10000 \

--stop-writes-on-bgsave-error no \

--rdbcompression yes \

--dbfilename dump.rdbCombine RDB and AOF for point-in-time recovery options. RDB provides faster recovery while AOF ensures no data loss since the last snapshot.

Memory Management in Containers

Containerized Redis requires explicit memory limits preventing resource exhaustion:

# Run with memory limit

docker run -d \

--name redis-limited \

-p 6379:6379 \

--memory=512m \

--memory-swap=512m \

redis:7-alpine \

redis-server \

--maxmemory 256mb \

--maxmemory-policy allkeys-lruMemory limits prevent containers from consuming excessive host resources. Swap limits prevent memory overcommitment. The maxmemory Redis setting controls actual data storage, leaving headroom for Redis overhead.

Eviction policies determine behavior when memory limits approach:

# All keys evicted using LRU (Least Recently Used)

--maxmemory-policy allkeys-lru

# Volatile keys evicted using LRU

--maxmemory-policy volatile-lru

# All keys randomly evicted

--maxmemory-policy allkeys-random

# Volatile keys randomly evicted

--volatile-random

# Evict keys using TTL (Least Recently Expired)

--volatile-ttl

# No eviction (writes fail when full)

--maxmemory-policy noevictionSelect policies based on whether all keys are candidates for eviction or only those with expiration times.

Redis Configuration Management

Containerized deployments require systematic configuration management addressing security, performance, and operational requirements.

Configuration Files and Overrides

Mount custom configuration files while preserving image defaults:

# Create custom redis.conf

cat > redis.conf <<EOF

bind 0.0.0.0

port 6379

protected-mode no

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize no

supervised no

pidfile /var/run/redis/redis-server.pid

loglevel notice

logfile ""

databases 16

always-show-logo no

set-proc-title yes

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /data

maxmemory 256mb

maxmemory-policy allkeys-lru

appendonly yes

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

slave-lazy-flush no

lazyfree-lazy-user-del no

EOFMount configuration files while allowing command-line overrides:

# Run with custom configuration

docker run -d \

--name redis-custom \

-p 6379:6379 \

-v $(pwd)/redis.conf:/usr/local/etc/redis/redis.conf:ro \

-v redis-data:/data \

redis:7-alpine \

redis-server /usr/local/etc/redis/redis.confConfiguration management tools like Ansible or Chef can generate Redis configurations dynamically based on deployment requirements.

Security Configuration

Redis lacks built-in encryption, requiring network isolation and authentication for production deployments:

# Enable authentication

docker run -d \

--name redis-secure \

-p 6379:6379 \

redis:7-alpine \

redis-server \

--requirepass "$(cat /run/secrets/redis-password)"

# Set master password for replication

docker run -d \

--name redis-master \

-p 6379:6379 \

redis:7-alpine \

redis-server \

--requirepass master-password \

--masterauth master-passwordPasswords should come from secrets management systems rather than command-line arguments. Docker secrets provide secure credential distribution:

# Create Docker secrets

echo "complex-password" | docker secret create redis_password -

# Deploy stack with secrets

docker stack deploy -c redis-stack.yml redisNetwork Isolation

Docker networks provide isolation for Redis containers:

# Create dedicated network

docker network create redis-network

# Run Redis on dedicated network

docker run -d \

--name redis-app \

--network redis-network \

redis:7-alpineApplication containers connect through the Docker network using container names as hostnames. Docker’s embedded DNS resolves service names to container IP addresses.

Docker Compose Redis Deployments

Docker Compose simplifies multi-container Redis deployments with declarative configuration:

# docker-compose.yml

version: '3.8'

services:

redis:

image: redis:7-alpine

container_name: redis-server

ports:

- "6379:6379"

volumes:

- redis-data:/data

- ./redis.conf:/usr/local/etc/redis/redis.conf:ro

command: redis-server /usr/local/etc/redis/redis.conf

deploy:

resources:

limits:

memory: 512M

reservations:

memory: 256M

healthcheck:

test: ["CMD", "redis-cli", "ping"]

interval: 10s

timeout: 5s

retries: 5

volumes:

redis-data:Health checks enable orchestration platform integration for reliable service discovery:

# Deploy stack

docker stack deploy -c docker-compose.yml myredis

# Verify service health

docker service ls

docker service ps myredis_redisRedis Cluster in Docker

Production deployments often require Redis clusters providing high availability and horizontal scaling.

Manual Cluster Setup

Creating Redis clusters requires coordination among multiple containers:

# Create Docker Compose for cluster

cat > docker-compose-cluster.yml <<'EOF'

version: '3.8'

services:

redis-node-1:

image: redis:7-alpine

container_name: redis-node-1

ports:

- "7001:6379"

volumes:

- redis-node-1:/data

command: |

redis-server

--port 6379

--cluster-enabled yes

--cluster-config-file /data/nodes.conf

--cluster-node-timeout 5000

--appendonly yes

networks:

redis-cluster:

ipv4_address: 172.28.0.11

redis-node-2:

image: redis:7-alpine

container_name: redis-node-2

ports:

- "7002:6379"

volumes:

- redis-node-2:/data

command: |

redis-server

--port 6379

--cluster-enabled yes

--cluster-config-file /data/nodes.conf

--cluster-node-timeout 5000

--appendonly yes

networks:

redis-cluster:

ipv4_address: 172.28.0.12

redis-node-3:

image: redis:7-alpine

container_name: redis-node-3

ports:

- "7003:6379"

volumes:

- redis-node-3:/data

command: |

redis-server

--port 6379

--cluster-enabled yes

--cluster-config-file /data/nodes.conf

--cluster-node-timeout 5000

--appendonly yes

networks:

redis-cluster:

ipv4_address: 172.28.0.13

networks:

redis-cluster:

driver: bridge

ipam:

config:

- subnet: 172.28.0.0/16

EOFInitialize the cluster after container startup:

# Start cluster containers

docker-compose -f docker-compose-cluster.yml up -d

# Wait for containers to be healthy

sleep 10

# Create cluster

docker exec redis-node-1 redis-cli \

--cluster create \

172.28.0.11:6379 \

172.28.0.12:6379 \

172.28.0.13:6379 \

--cluster-replicas 0 \

--cluster-yesRedis Sentinel for High Availability

Sentinel provides automatic failover for Redis master replicas:

# docker-compose-sentinel.yml

version: '3.8'

services:

redis-master:

image: redis:7-alpine

container_name: redis-master

ports:

- "6380:6379"

volumes:

- redis-master:/data

command: redis-server --requirepass sentinel-pass

redis-slave:

image: redis:7-alpine

container_name: redis-slave

ports:

- "6381:6379"

volumes:

- redis-slave:/data

command: redis-server --slaveof redis-master 6379 --requirepass sentinel-pass --masterauth sentinel-pass

depends_on:

- redis-master

redis-sentinel-1:

image: redis:7-alpine

container_name: redis-sentinel-1

command: |

redis-server /usr/local/etc/redis/sentinel.conf

--sentinel monitor mymaster redis-master 6379 2

--sentinel down-after-milliseconds mymaster 5000

--sentinel failover-timeout mymaster 10000

volumes:

- ./sentinel.conf:/usr/local/etc/redis/sentinel.conf:ro

depends_on:

- redis-master

- redis-slave

redis-sentinel-2:

image: redis:7-alpine

container_name: redis-sentinel-2

command: |

redis-server /usr/local/etc/redis/sentinel.conf

--sentinel monitor mymaster redis-master 6379 2

--sentinel down-after-milliseconds mymaster 5000

--sentinel failover-timeout mymaster 10000

volumes:

- ./sentinel.conf:/usr/local/etc/redis/sentinel.conf:ro

depends_on:

- redis-master

- redis-slave

volumes:

redis-master:

redis-slave:Sentinel monitors master availability and promotes slaves when masters fail. Multiple sentinels ensure failover occurs even if individual sentinels become unavailable.

Performance Optimization

Containerized Redis requires tuning for optimal performance in networked environments.

Connection Management

Connection pooling in client applications reduces connection overhead:

# Python Redis connection pooling example

import redis

from redis.connection import ConnectionPool

pool = ConnectionPool(

host='redis-server',

port=6379,

db=0,

max_connections=50,

socket_timeout=5,

socket_connect_timeout=5,

decode_responses=True

)

r = redis.Redis(connection_pool=pool)Containerized Redis benefits from increased connection limits:

# Increase connection limits in redis.conf

tcp-backlog 65535

timeout 0

maxclients 10000Latency Considerations

Container networking introduces latency compared to local connections. Minimize hops between Redis and application containers:

# Deploy Redis on same Docker network as applications

docker network create app-network

docker run -d \

--name redis-app \

--network app-network \

redis:7-alpine

docker run -d \

--name api-server \

--network app-network \

myapp:latestHost networking mode reduces Docker network overhead:

# Host networking for lowest latency

docker run -d \

--name redis-host \

--network host \

redis:7-alpineHost networking removes Docker’s network isolation, requiring careful firewall configuration. Use host networking when latency is critical and network isolation is managed elsewhere.

Memory Efficiency

Optimize Redis memory usage through data structure selection and compression:

# Enable memory optimization in redis.conf

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64Small hash, list, set, and sorted set objects store compactly using ziplist encoding when element counts and sizes remain small.

Monitoring and Logging

Containerized Redis requires monitoring integration for operational visibility.

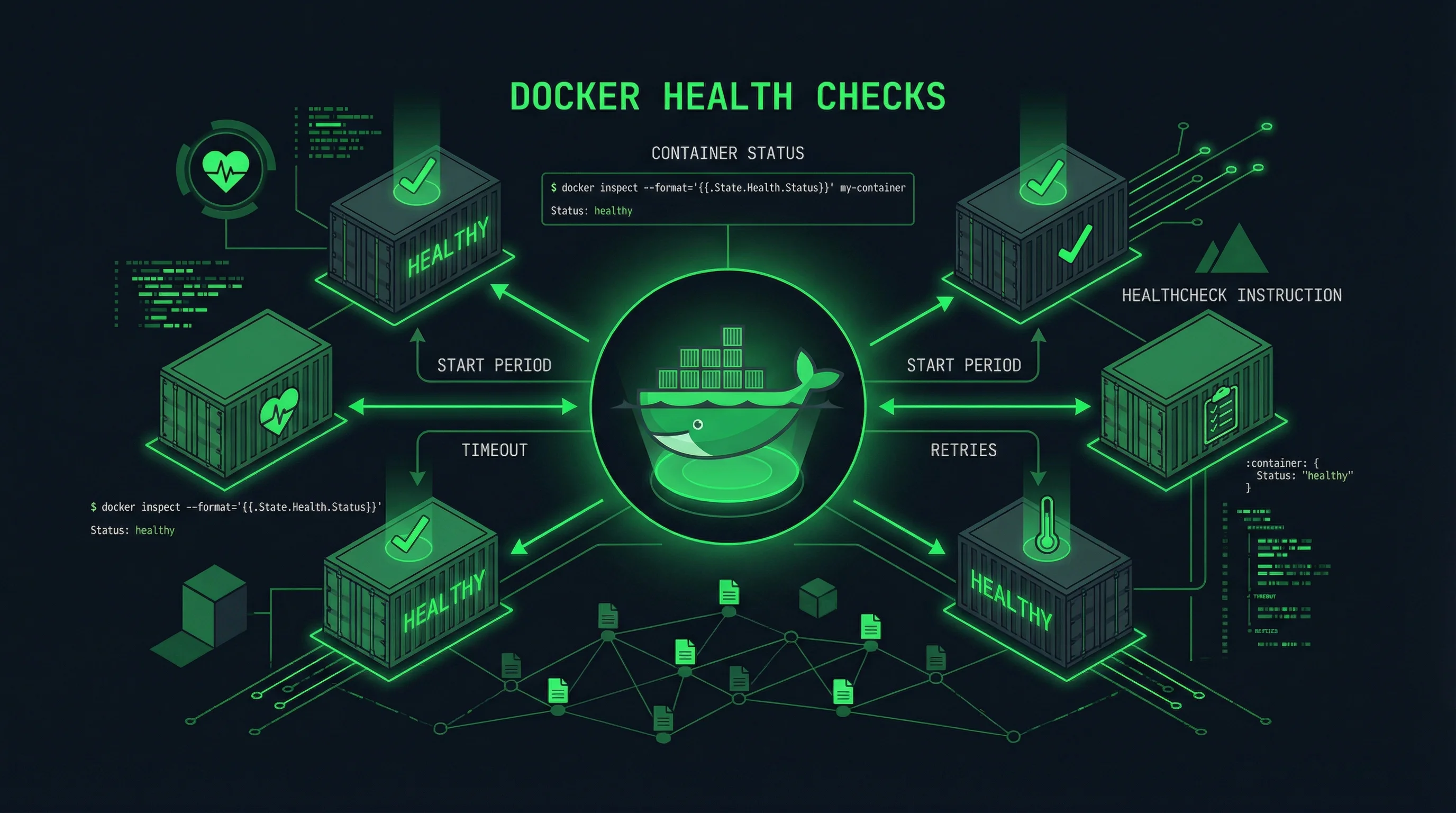

Health Checks

Comprehensive health checks verify Redis functionality:

# Extended health check script

#!/bin/bash

REDIS_HOST=${REDIS_HOST:-localhost}

REDIS_PORT=${REDIS_PORT:-6379}

REDIS_PASSWORD=${REDIS_PASSWORD:-}

RESPONSE=$(redis-cli -h $REDIS_HOST -p $REDIS_PORT \

${REDIS_PASSWORD:+-a $REDIS_PASSWORD} ping)

if [ "$RESPONSE" != "PONG" ]; then

echo "Redis ping failed: $RESPONSE"

exit 1

fi

# Check memory usage

MEMORY=$(redis-cli -h $REDIS_HOST -p $REDIS_PORT \

${REDIS_PASSWORD:+-a $REDIS_PASSWORD} info memory | grep used_memory_human)

echo "Redis OK - $MEMORY"

exit 0Health check results integrate with orchestration platform restart policies and load balancer routing.

Metrics Export

Redis provides built-in metrics accessible via INFO command:

# Extract key metrics

redis-cli INFO | grep -E \

"(connected_clients|used_memory_human|keyspace|uptime_in_seconds|instantaneous_ops_per_sec)"Prometheus exporters enable metric collection and alerting:

# Run Redis exporter alongside Redis

docker run -d \

--name redis-exporter \

--network app-network \

-p 9121:9121 \

oliver006/redis_exporter \

--redis.addr=redis://redis-app:6379Grafana dashboards visualize Redis performance metrics, identifying slow queries, memory pressure, and connection exhaustion before they cause failures.

Backup and Recovery

Redis data requires systematic backup procedures matching recovery time objectives.

AOF and RDB Backup Strategies

Containerized backups use temporary containers that access Redis volumes:

# AOF backup

docker run --rm \

--volumes-from redis-server \

-v $(pwd):/backup \

alpine:latest \

cp /data/appendonly.aof /backup/redis-aof-$(date +%Y%m%d).aof

# RDB backup

docker run --rm \

--volumes-from redis-server \

-v $(pwd):/backup \

alpine:latest \

cp /data/dump.rdb /backup/redis-rdb-$(date +%Y%m%d).rdbSchedule regular backups using cron or orchestration platform scheduling:

# Daily backup script

#!/bin/bash

BACKUP_DIR="/backups/redis"

DATE=$(date +%Y%m%d)

mkdir -p $BACKUP_DIR

docker run --rm \

--volumes-from redis-server \

-v $BACKUP_DIR:/backup \

alpine:latest \

sh -c "cp /data/*.rdb /backup/ && cp /data/appendonly.aof /backup/"

gzip $BACKUP_DIR/*.rdb $BACKUP_DIR/*.aof

find $BACKUP_DIR -name "*.gz" -mtime +7 -deleteRecovery Procedures

Restore backups to recovery volumes:

# Restore RDB file

docker run --rm \

-v redis-recovery:/data \

-v $(pwd):/backup \

alpine:latest \

sh -c "zcat /backup/redis-rdb-20260208.rdb.gz > /data/dump.rdb"

# Start Redis with restored data

docker run -d \

--name redis-recovered \

-p 6379:6379 \

-v redis-recovery:/data \

redis:7-alpineTest recovery procedures regularly to verify backup integrity and recovery documentation.

Conclusion

Dockerized Redis deployments provide flexible, scalable infrastructure for caching, session storage, and messaging workloads. Basic single-instance deployments suit development and non-critical production use cases. Clusters provide horizontal scaling and high availability for demanding applications.

Configuration management, security hardening, and monitoring integration ensure reliable production operations. Backup and recovery procedures protect against data loss. Performance optimization through connection management and network configuration maximizes Redis throughput.

Consider managed Redis services like AWS ElastiCache or Redis Cloud when operational overhead exceeds team capacity. Self-hosted deployments provide maximum control but require ongoing maintenance attention.

Related Posts: