Introduction

Containers are ephemeral by design—they start, run, and stop with no guarantee of persistence. When a container stops or is replaced, its filesystem disappears. This ephemeral nature suits stateless applications perfectly but poses challenges for stateful services like databases, message queues, and file servers. Docker volumes provide the mechanism for persisting data beyond container lifecycles.

Docker volumes decouple data storage from container lifecycles. Volumes exist independently of containers, surviving container removal and recreation. They can be shared between containers, backed up and restored, moved between hosts, and managed through Docker’s CLI or orchestration platforms. Volume management is essential for running stateful applications in containers.

This comprehensive guide covers Docker volume creation, management, and advanced configurations. You will learn to create and configure volumes, implement backup and recovery procedures, migrate data between storage backends, and optimize volume performance for production workloads. Master volume management to confidently deploy databases, message queues, and other stateful services in containerized environments.

Understanding Docker Storage

Docker offers multiple storage mechanisms, each suited to different scenarios.

Volume Types

Docker provides three storage mechanisms for persisting data. Named volumes, managed entirely by Docker, work well for most persistence needs. Bind mounts map host directories into containers, useful for development workflows and specific use cases requiring direct host access. Tmpfs mounts store data in memory, ideal for sensitive data that should not persist on disk.

Named volumes provide the cleanest management experience. Docker creates them automatically, handles permissions, and provides CLI commands for inspection and management. They work consistently across Linux and Windows hosts and integrate well with orchestration platforms.

Bind mounts offer maximum flexibility but require more careful management. You control the host directory completely, enabling scenarios like serving files from host directories or accessing host resources. However, you must manage permissions, paths, and host independence yourself.

Volume Driver Architecture

Docker’s volume plugin system enables storage backends beyond local filesystems. Plugins like Convoy, Rex-Ray, and cloud provider plugins connect Docker to external storage systems. This architecture enables enterprise features like storage arrays, cloud storage services, and distributed filesystems.

The plugin system intercepts volume operations (create, mount, unmount, remove) and translates them to storage-specific commands. Plugins can provide advanced features like snapshots, replication, encryption, and quality-of-service controls that native Docker volumes lack.

Creating and Managing Volumes

Docker CLI provides complete volume management capabilities.

Creating Named Volumes

# Create a simple named volume

docker volume create my_data

# Create volume with specific driver

docker volume create --driver local \

--opt type=none \

--opt o=bind \

--opt device=/path/on/host \

my_bound_volume

# Create volume with capacity limit

docker volume create \

--opt o=size=10G \

my_sized_volume

# Create volume with specific options

docker volume create \

--driver local \

--opt type=xfs \

--opt device=/dev/sdb1 \

my_xfs_volumeUsing Volumes in Containers

# Mount a named volume

docker run -d \

--name myapp \

-v my_data:/var/lib/myapp \

myapp:latest

# Mount with read-only access

docker run -d \

--name readonly-app \

-v config_data:/etc/config:ro \

myapp:latest

# Mount multiple volumes

docker run -d \

--name multi-volume \

-v data_volume:/var/lib/data \

-v log_volume:/var/log/app \

-v config_volume:/etc/myapp:ro \

myapp:latestManaging Volumes

# List all volumes

docker volume ls

# Inspect a volume

docker volume inspect my_data

# Remove unused volumes

docker volume prune

# Remove specific volume (fails if in use)

docker volume rm my_data

# Remove all unused volumes

docker system prune --volumes

# Check volume disk usage

docker system df -vBackup and Restore

Regular backups protect against data loss.

Backup Script

#!/bin/bash

# backup-volume.sh

# Usage: ./backup-volume.sh volume_name backup_filename

VOLUME_NAME=$1

BACKUP_FILE=$2

if [ -z "$VOLUME_NAME" ] || [ -z "$BACKUP_FILE" ]; then

echo "Usage: $0 <volume_name> <backup_file.tar.gz>"

exit 1

fi

docker run --rm \

-v ${VOLUME_NAME}:/data \

-v $(pwd):/backup \

alpine:latest \

tar czf /backup/${BACKUP_FILE} -C /data .

echo "Backup complete: ${BACKUP_FILE}"# Backup a volume

./backup-volume.sh my_data backup-$(date +%Y%m%d).tar.gz

# Incremental backup using rsync

docker run --rm \

-v my_data:/data \

-v $(pwd)/backups:/backup \

alpine:latest \

sh -c "rsync -a /data/ /backup/incremental/$(date +%Y%m%d%H%M%S)/"Restore Script

#!/bin/bash

# restore-volume.sh

# Usage: ./restore-volume.sh volume_name backup_file.tar.gz

VOLUME_NAME=$1

BACKUP_FILE=$2

if [ -z "$VOLUME_NAME" ] || [ -z "$BACKUP_FILE" ]; then

echo "Usage: $0 <volume_name> <backup_file.tar.gz>"

exit 1

fi

if [ ! -f "$BACKUP_FILE" ]; then

echo "Error: Backup file not found: $BACKUP_FILE"

exit 1

fi

docker run --rm \

-v ${VOLUME_NAME}:/data \

-v $(pwd):/backup \

alpine:latest \

tar xzf /backup/${BACKUP_FILE} -C /data

echo "Restore complete"# Restore from backup

./restore-volume.sh my_data backup-20240208.tar.gzDocker Compose Volumes

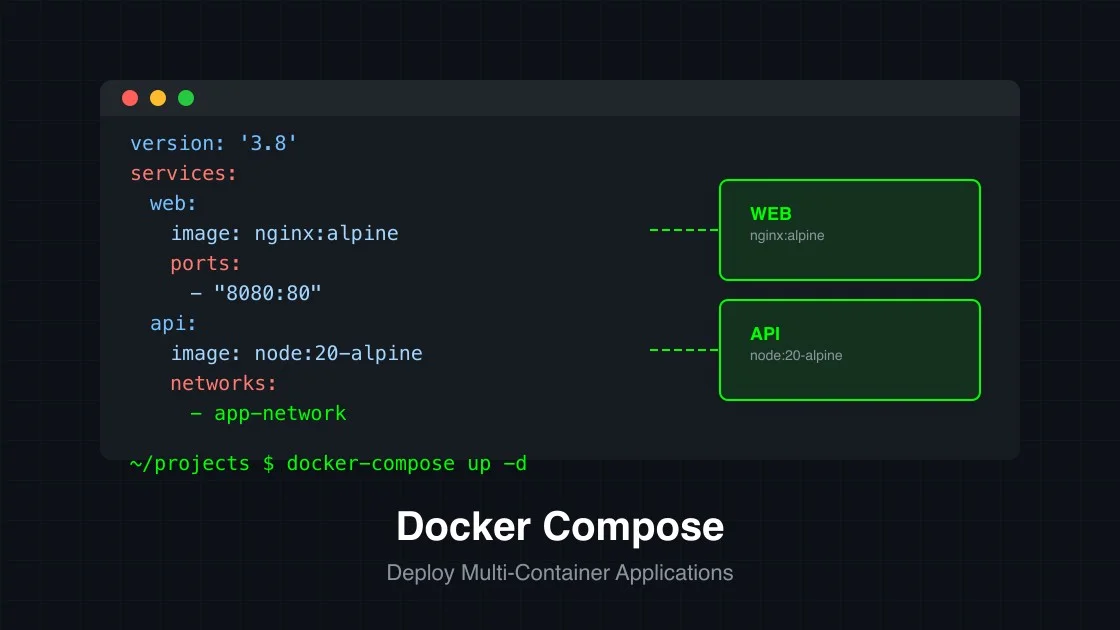

Compose files define volumes alongside services.

Compose Volume Configuration

version: '3.8'

services:

postgres:

image: postgres:15-alpine

container_name: postgres_db

restart: unless-stopped

environment:

POSTGRES_DB: myapp

POSTGRES_USER: appuser

POSTGRES_PASSWORD: ${DB_PASSWORD}

volumes:

- postgres_data:/var/lib/postgresql/data

- ./init-scripts:/docker-entrypoint-initdb.d

- postgres_config:/etc/postgresql

ports:

- "5432:5432"

healthcheck:

test: ["CMD-SHELL", "pg_isready -U $${POSTGRES_USER} -d $${POSTGRES_DB}"]

interval: 10s

timeout: 5s

retries: 5

redis:

image: redis:7-alpine

container_name: redis_cache

volumes:

- redis_data:/data

ports:

- "6379:6379"

app:

build: ./app

container_name: myapp

depends_on:

postgres:

condition: service_healthy

redis:

condition: service_started

volumes:

- app_data:/var/log/myapp

- ./config:/etc/myapp:ro

environment:

- DATABASE_URL=postgres://appuser:password@postgres:5432/myapp

- REDIS_URL=redis://redis:6379

volumes:

postgres_data:

driver: local

driver_opts:

type: none

o: bind

device: /data/postgres

redis_data:

app_data:Volume Templates

Compose supports variable substitution in volumes:

volumes:

app_data:

name: ${APP_NAME:-myapp}_data_${ENVIRONMENT:-dev}Bind Mounts

Bind mounts provide direct host directory access.

Using Bind Mounts

# Mount host directory

docker run -d \

-v /host/path:/container/path \

myapp:latest

# Read-only bind mount

docker run -d \

-v /host/config:/etc/myapp:ro \

myapp:latest

# Docker Compose bind mount

services:

app:

volumes:

- ./local/path:/container/path

- ${PWD}/config:/etc/app:roDevelopment Workflow

Bind mounts excel in development where code changes should reflect immediately:

version: '3.8'

services:

dev:

build: .

volumes:

# Mount source code for hot reload

- ./src:/app/src:ro

# Mount configuration

- ./config:/app/config:ro

# Mount local modules

- node_modules:/app/node_modules

environment:

- NODE_ENV=development

- WATCH=1

command: npm run dev

volumes:

node_modules:Advanced Storage Configurations

Production environments often require advanced storage features.

NFS Volumes

# Create NFS volume

docker volume create \

--driver local \

--opt type=nfs \

--opt o=addr=192.168.1.100,rw \

--opt device=:/exports/nfs_data \

nfs_data

# NFS in Docker Compose

volumes:

nfs_volume:

driver: local

driver_opts:

type: nfs

o: addr=192.168.1.100,rw,nfsvers=4

device: ":/exports/nfs_data"Encrypted Volumes

# Create encrypted volume (requires dm-crypt)

docker volume create \

--driver local \

--opt type=dmcrypt \

--opt device=/dev/sdc1 \

encrypted_dataMultiple Storage Backends

# docker-compose.yml with multiple backends

volumes:

# Local SSD for performance

cache_data:

driver: local

driver_opts:

type: none

o: bind

device: /mnt/ssd/caches

# NFS for shared data

shared_data:

driver: local

driver_opts:

type: nfs

o: addr=nfs.example.com,rw

device::/exports/shared

# Default local storage

app_data:Volume Permissions

Managing permissions prevents access issues.

Permission Issues

Common errors stem from UID/GID mismatches between container processes and host directories:

# Check container user

docker run --rm myapp id

# Create volume with specific ownership

docker run --rm \

-v my_data:/data \

alpine:latest \

chown -R 1000:1000 /data

# Fix permissions on existing volume

docker run --rm \

-v my_data:/data \

alpine:latest \

chown -R 1001:1001 /dataDefining User in Dockerfile

FROM node:20-alpine

# Create group and user

RUN addgroup -g 1000 appgroup && \

adduser -u 1000 -G appgroup -s /bin/sh -D appuser

WORKDIR /app

COPY --chown=appuser:appgroup . .

USER appuser

EXPOSE 3000

CMD ["node", "index.js"]Performance Optimization

Volume performance significantly affects application behavior.

Performance Considerations

Different storage backends provide different performance characteristics:

| Storage Type | Read Performance | Write Performance | Latency |

|---|---|---|---|

| Local SSD | Excellent | Excellent | Low |

| Local HDD | Good | Moderate | Moderate |

| NFS | Good | Moderate | Higher |

| Cloud Storage | Moderate | Moderate | Variable |

| tmpfs | Excellent | Excellent | Very Low |

tmpfs for Sensitive Data

# Create tmpfs mount

docker run -d \

--name sensitive-app \

--tmpfs /run/secrets:size=10M,uid=1000 \

myapp:latest

# Docker Compose tmpfs

services:

app:

image: myapp:latest

tmpfs:

- /run/secrets:size=10M,uid=1000

- /tmp:size=100MI/O Optimization

# Create volume with specific I/O settings (requires appropriate storage)

docker volume create \

--driver local \

--opt type=btrfs \

--opt device=/dev/sdb1 \

btrfs_volume

# Use volume for I/O-intensive operations

docker run -d \

-v iops_volume:/data \

--device-read-iops /dev/sdb1:1000 \

--device-write-iops /dev/sdb1:1000 \

iops_app:latestVolume Migration

Moving data between storage systems requires careful planning.

Migration Between Hosts

# Backup on source host

docker run --rm \

-v old_volume:/data \

-v $(pwd):/backup \

alpine:latest \

tar czf /backup/volume-backup.tar.gz -C /data .

# Transfer to new host

scp volume-backup.tar.gz user@new-host:/tmp/

# Restore on destination host

docker volume create new_volume

docker run --rm \

-v new_volume:/data \

-v /tmp:/backup \

alpine:latest \

tar xzf /backup/volume-backup.tar.gz -C /dataMigration Between Volume Types

# Convert bind mount to named volume

docker run --rm \

-v /old/data:/data:ro \

-v new_volume:/newdata \

alpine:latest \

sh -c "cp -a /data/* /newdata/"

# Migrate from local to NFS

docker run --rm \

-v local_volume:/data \

-v nfs_volume:/nfs:rw \

alpine:latest \

sh -c "cp -a /data/* /nfs/"Monitoring Volume Usage

Track volume consumption to prevent space issues.

Checking Disk Usage

# Docker system disk usage

docker system df -v

# Specific volume usage

docker run --rm \

-v my_volume:/data \

alpine:latest \

du -sh /data

# Find large files in volume

docker run --rm \

-v my_volume:/data \

alpine:latest \

find /data -type f -exec du -h {} \; | sort -rh | head -20Volume Monitoring Script

#!/bin/bash

# monitor-volumes.sh

THRESHOLD=80

for volume in $(docker volume ls -q); do

usage=$(docker run --rm \

-v ${volume}:/data \

alpine:latest \

df /data | awk 'NR==2 {print $5}' | tr -d '%')

if [ "$usage" -gt "$THRESHOLD" ]; then

echo "ALERT: Volume ${volume} is ${usage}% full"

# Send notification

curl -X POST "https://alerts.example.com" \

-d "{\"volume\": \"${volume}\", \"usage\": \"${usage}%\"}"

fi

doneTroubleshooting Common Issues

Volume problems manifest in various ways.

Mount Permission Denied

# Check volume ownership

docker volume inspect my_volume

# Fix permissions

docker run --rm \

-v my_volume:/data \

alpine:latest \

chown -R 1000:1000 /data

# Verify SELinux/AppArmor context

docker run --rm \

-v my_volume:/data:Z \

alpine:latest \

ls -la /dataVolume Not Found

# Verify volume exists

docker volume ls | grep my_volume

# Create missing volume

docker volume create my_volume

# Check for typos in mount paths

docker inspect container_name | jq '.[0].Mounts'Data Loss Prevention

# Never use volumes for critical data without backups

# Always test restore procedures

# Use --volumes-from for container cloning

docker run --rm \

--volumes-from source_container \

-v $(pwd)/backup:/backup \

alpine:latest \

tar czf /backup/container-backup.tar.gz -C / path/in/containerConclusion

Docker volumes provide essential persistence for stateful applications. Start with named volumes for most persistence needs, implement regular backups for critical data, and use bind mounts for development workflows requiring host access. Monitor volume usage and plan capacity to prevent space-related failures.

For production databases and critical services, consider enterprise storage solutions that provide additional features like snapshots, replication, and backups. The volume plugin ecosystem connects Docker to storage systems that meet enterprise requirements for performance, reliability, and management.

Related Guides: